Introduction to GFS history data

[1]:

def print_dict(d, indent=0):
    # Recursively print a nested dict, with keys in bold green (ANSI escape codes).
    for key, value in d.items():
        if isinstance(value, dict):
            print(' ' * indent + f"\033[1;32m{key}:\033[0m")
            print_dict(value, indent + 4)
        else:
            print(' ' * indent + f"\033[1;32m{key}:\033[0m {value if value is not None else 'null'}")

[2]:

import intake

import pandas as pd

import xarray as xr

import warnings

warnings.filterwarnings('ignore')

# open metadata description file

cat = intake.open_catalog('intake-yaml/his_gfs.yaml')

cat.date = '2021032312'

print_dict(cat.metadata)  # print_dict prints directly and returns None, so no outer print() is needed

# check group in the file

print(f'There are groups: {list(cat)}')

#print('each detail are below:\n')

# metadata for a group

#for g in list(cat):

# print(f'=====Information for group {g}=====')

# print(cat[g])

# read a group into dask

#cat['forecast'](date='2021032312').to_dask() #cat.forecast

description: GFS history data over China range. Downloaded from NCEP. Starts from 2021-3-23. Access by {endpoint}/{batch_folders}. Due to their different time length, variables are devided into three groups(anl, f000 and forecast). In these groups variables are different.

version: 1

stored:

tag: GFS_HIS

endpoint: us3://nwp/gfs/history

batch_folders: gfs.{YYYYMMDD}{00/12}.zarr

catalog: NWP|GFS|HISTORY

var_dims: var(time, latitude, longitude)

grid_type: regular_ll

latitude: 0,60

longitude: 70,140

lat_inc: 0.25

lon_inc: 0.25

time_list: time[anl, range(1,121), range(123,385,3)]

format: zarr

batch: [0, 12]

arrive_time: not set

produced_by: NCEP

up: not set

down: not set

filename_rule: gfs.{YYYYMMDD}{00/12}.zarr

office_web: not set

index_all: 210 files per batch

history_time: since 2021-3-23

history_dize: under calculation

history_path: us3://nwp/gfs/history

history_format: zarr

references: null

variables: check below

driver: zarr

process: null

log: null

source: download from NCEP

storage_policy: zip zarr on us3

origin:

url: s3://noaa-gfs-bdp-pds/gfs.{YYYYMMDD}/{batch}/atmos/gfs.t00z.pgrb2.0p25.[anl|f{forecast_hour}]

download_method: awscli cp --no-sign-request

filename_rule: same name in each day's directory. forecast_hour range(0,121,1), range(123,385,3)

format: grib2

batch: 00/06/12/18 daily

variables: check below

space_inc: 0.25

official_introduction: null

parameters:

endpoint:

type: str

description: The base directory for the data. Usually it's the first farther directory of daily sub-directory.

default: /data/sample_data/

date:

type: str

description: The date to read data, a str with format 'YYYYMMDDbb', bb is the batch 00 or 12, default is '2021032300'

default: 2021032312

reference: null

None

There are groups: ['anl', 'f000', 'forecast']
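The metadata above gives a folder naming rule (`gfs.{YYYYMMDD}{00/12}.zarr`), a `date` parameter of the form 'YYYYMMDDbb', and a forecast-hour schedule in the `origin` section. Below is a minimal sketch, assuming only those printed rules, of how the folder name and the per-batch file count could be derived. `batch_folder` and `forecast_hours` are hypothetical helper names, not part of the catalog or of intake.

```python
import re


def batch_folder(date: str) -> str:
    """Build the zarr folder name for a 'YYYYMMDDbb' date string (hypothetical helper)."""
    match = re.fullmatch(r"(\d{8})(00|12)", date)
    if match is None:
        raise ValueError(f"expected 'YYYYMMDDbb' with bb in 00/12, got {date!r}")
    yyyymmdd, bb = match.groups()
    # Follows the printed filename_rule: gfs.{YYYYMMDD}{00/12}.zarr
    return f"gfs.{yyyymmdd}{bb}.zarr"


def forecast_hours() -> list:
    """Forecast hours from the origin filename_rule: hourly to 120 h, then 3-hourly to 384 h."""
    return list(range(0, 121, 1)) + list(range(123, 385, 3))


hours = forecast_hours()
files_per_batch = len(hours) + 1  # forecast files plus the single analysis (anl) file

print(batch_folder("2021032312"))  # gfs.2021032312.zarr
print(files_per_batch)             # 210
```

The 209 forecast files plus one analysis file agree with the `index_all: 210 files per batch` entry in the metadata.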

[3]:

import ipywidgets as widgets # widget library

from IPython.display import display # function for displaying widgets

import xarray

base = cat.user_parameters['endpoint'].default

batch = cat.user_parameters['date'].default

ds = xr.open_dataset(f"{base}gfs.{batch}.zarr.zip", group='forecast', engine='zarr', consolidated=True)

#print(ds)

print('information for group forecast')

cat['forecast'](date='2021032312').to_dask()

print("Query for group forecast: type a variable name and press Enter, or pick a variable from the list")

output = widgets.Output()

text = widgets.Text()

def print_value(sender):
    # Print the variable typed into the text box, if the dataset has it.
    with output:
        #cat['forecast'](date='2021032312').to_dask()[sender.value] #cat.forecast
        if sender.value in ds.data_vars.keys():
            print(ds[sender.value])
        else:
            print("no such variable")

text.on_submit(print_value)

def chosen(_):
    # Print the variable currently selected in the dropdown.
    with output:
        print(ds[dpd.value])

variable_names = ds.data_vars.keys()

dpd = widgets.Dropdown(
    options=variable_names,
    disabled=False,
)

dpd.observe(chosen, names="value")

out = widgets.Output(layout={'border': '1px solid black'})

clear_button = widgets.Button(description='Clear Output')

clear_button.on_click(lambda x:output.clear_output())

display(clear_button)

display(text, dpd)

display(output)

information for group forecast

Query for group forecast: type a variable name and press Enter, or pick a variable from the list

[4]:

print('sample of time series')

print(ds['DPT_2maboveground']['time'].values)

sample of time series

['2021-03-23T13:00:00.000000000' '2021-03-23T14:00:00.000000000'

'2021-03-23T15:00:00.000000000' '2021-03-23T16:00:00.000000000'

'2021-03-23T17:00:00.000000000' '2021-03-23T18:00:00.000000000'

'2021-03-23T19:00:00.000000000' '2021-03-23T20:00:00.000000000'

'2021-03-23T21:00:00.000000000' '2021-03-23T22:00:00.000000000'

'2021-03-23T23:00:00.000000000' '2021-03-24T00:00:00.000000000'

'2021-03-24T01:00:00.000000000' '2021-03-24T02:00:00.000000000'

'2021-03-24T03:00:00.000000000' '2021-03-24T04:00:00.000000000'

'2021-03-24T05:00:00.000000000' '2021-03-24T06:00:00.000000000'

'2021-03-24T07:00:00.000000000' '2021-03-24T08:00:00.000000000'

'2021-03-24T09:00:00.000000000' '2021-03-24T10:00:00.000000000'

'2021-03-24T11:00:00.000000000' '2021-03-24T12:00:00.000000000'

'2021-03-24T13:00:00.000000000' '2021-03-24T14:00:00.000000000'

'2021-03-24T15:00:00.000000000' '2021-03-24T16:00:00.000000000'

'2021-03-24T17:00:00.000000000' '2021-03-24T18:00:00.000000000'

'2021-03-24T19:00:00.000000000' '2021-03-24T20:00:00.000000000'

'2021-03-24T21:00:00.000000000' '2021-03-24T22:00:00.000000000'

'2021-03-24T23:00:00.000000000' '2021-03-25T00:00:00.000000000'

'2021-03-25T01:00:00.000000000' '2021-03-25T02:00:00.000000000'

'2021-03-25T03:00:00.000000000' '2021-03-25T04:00:00.000000000'

'2021-03-25T05:00:00.000000000' '2021-03-25T06:00:00.000000000'

'2021-03-25T07:00:00.000000000' '2021-03-25T08:00:00.000000000'

'2021-03-25T09:00:00.000000000' '2021-03-25T10:00:00.000000000'

'2021-03-25T11:00:00.000000000' '2021-03-25T12:00:00.000000000'

'2021-03-25T13:00:00.000000000' '2021-03-25T14:00:00.000000000'

'2021-03-25T15:00:00.000000000' '2021-03-25T16:00:00.000000000'

'2021-03-25T17:00:00.000000000' '2021-03-25T18:00:00.000000000'

'2021-03-25T19:00:00.000000000' '2021-03-25T20:00:00.000000000'

'2021-03-25T21:00:00.000000000' '2021-03-25T22:00:00.000000000'

'2021-03-25T23:00:00.000000000' '2021-03-26T00:00:00.000000000'

'2021-03-26T01:00:00.000000000' '2021-03-26T02:00:00.000000000'

'2021-03-26T03:00:00.000000000' '2021-03-26T04:00:00.000000000'

'2021-03-26T05:00:00.000000000' '2021-03-26T06:00:00.000000000'

'2021-03-26T07:00:00.000000000' '2021-03-26T08:00:00.000000000'

'2021-03-26T09:00:00.000000000' '2021-03-26T10:00:00.000000000'

'2021-03-26T11:00:00.000000000' '2021-03-26T12:00:00.000000000'

'2021-03-26T13:00:00.000000000' '2021-03-26T14:00:00.000000000'

'2021-03-26T15:00:00.000000000' '2021-03-26T16:00:00.000000000'

'2021-03-26T17:00:00.000000000' '2021-03-26T18:00:00.000000000'

'2021-03-26T19:00:00.000000000' '2021-03-26T20:00:00.000000000'

'2021-03-26T21:00:00.000000000' '2021-03-26T22:00:00.000000000'

'2021-03-26T23:00:00.000000000' '2021-03-27T00:00:00.000000000'

'2021-03-27T01:00:00.000000000' '2021-03-27T02:00:00.000000000'

'2021-03-27T03:00:00.000000000' '2021-03-27T04:00:00.000000000'

'2021-03-27T05:00:00.000000000' '2021-03-27T06:00:00.000000000'

'2021-03-27T07:00:00.000000000' '2021-03-27T08:00:00.000000000'

'2021-03-27T09:00:00.000000000' '2021-03-27T10:00:00.000000000'

'2021-03-27T11:00:00.000000000' '2021-03-27T12:00:00.000000000'

'2021-03-27T13:00:00.000000000' '2021-03-27T14:00:00.000000000'

'2021-03-27T15:00:00.000000000' '2021-03-27T16:00:00.000000000'

'2021-03-27T17:00:00.000000000' '2021-03-27T18:00:00.000000000'

'2021-03-27T19:00:00.000000000' '2021-03-27T20:00:00.000000000'

'2021-03-27T21:00:00.000000000' '2021-03-27T22:00:00.000000000'

'2021-03-27T23:00:00.000000000' '2021-03-28T00:00:00.000000000'

'2021-03-28T01:00:00.000000000' '2021-03-28T02:00:00.000000000'

'2021-03-28T03:00:00.000000000' '2021-03-28T04:00:00.000000000'

'2021-03-28T05:00:00.000000000' '2021-03-28T06:00:00.000000000'

'2021-03-28T07:00:00.000000000' '2021-03-28T08:00:00.000000000'

'2021-03-28T09:00:00.000000000' '2021-03-28T10:00:00.000000000'

'2021-03-28T11:00:00.000000000' '2021-03-28T12:00:00.000000000'

'2021-03-28T15:00:00.000000000' '2021-03-28T18:00:00.000000000'

'2021-03-28T21:00:00.000000000' '2021-03-29T00:00:00.000000000'

'2021-03-29T03:00:00.000000000' '2021-03-29T06:00:00.000000000'

'2021-03-29T09:00:00.000000000' '2021-03-29T12:00:00.000000000'

'2021-03-29T15:00:00.000000000' '2021-03-29T18:00:00.000000000'

'2021-03-29T21:00:00.000000000' '2021-03-30T00:00:00.000000000'

'2021-03-30T03:00:00.000000000' '2021-03-30T06:00:00.000000000'

'2021-03-30T09:00:00.000000000' '2021-03-30T12:00:00.000000000'

'2021-03-30T15:00:00.000000000' '2021-03-30T18:00:00.000000000'

'2021-03-30T21:00:00.000000000' '2021-03-31T00:00:00.000000000'

'2021-03-31T03:00:00.000000000' '2021-03-31T06:00:00.000000000'

'2021-03-31T09:00:00.000000000' '2021-03-31T12:00:00.000000000'

'2021-03-31T15:00:00.000000000' '2021-03-31T18:00:00.000000000'

'2021-03-31T21:00:00.000000000' '2021-04-01T00:00:00.000000000'

'2021-04-01T03:00:00.000000000' '2021-04-01T06:00:00.000000000'

'2021-04-01T09:00:00.000000000' '2021-04-01T12:00:00.000000000'

'2021-04-01T15:00:00.000000000' '2021-04-01T18:00:00.000000000'

'2021-04-01T21:00:00.000000000' '2021-04-02T00:00:00.000000000'

'2021-04-02T03:00:00.000000000' '2021-04-02T06:00:00.000000000'

'2021-04-02T09:00:00.000000000' '2021-04-02T12:00:00.000000000'

'2021-04-02T15:00:00.000000000' '2021-04-02T18:00:00.000000000'

'2021-04-02T21:00:00.000000000' '2021-04-03T00:00:00.000000000'

'2021-04-03T03:00:00.000000000' '2021-04-03T06:00:00.000000000'

'2021-04-03T09:00:00.000000000' '2021-04-03T12:00:00.000000000'

'2021-04-03T15:00:00.000000000' '2021-04-03T18:00:00.000000000'

'2021-04-03T21:00:00.000000000' '2021-04-04T00:00:00.000000000'

'2021-04-04T03:00:00.000000000' '2021-04-04T06:00:00.000000000'

'2021-04-04T09:00:00.000000000' '2021-04-04T12:00:00.000000000'

'2021-04-04T15:00:00.000000000' '2021-04-04T18:00:00.000000000'

'2021-04-04T21:00:00.000000000' '2021-04-05T00:00:00.000000000'

'2021-04-05T03:00:00.000000000' '2021-04-05T06:00:00.000000000'

'2021-04-05T09:00:00.000000000' '2021-04-05T12:00:00.000000000'

'2021-04-05T15:00:00.000000000' '2021-04-05T18:00:00.000000000'

'2021-04-05T21:00:00.000000000' '2021-04-06T00:00:00.000000000'

'2021-04-06T03:00:00.000000000' '2021-04-06T06:00:00.000000000'

'2021-04-06T09:00:00.000000000' '2021-04-06T12:00:00.000000000'

'2021-04-06T15:00:00.000000000' '2021-04-06T18:00:00.000000000'

'2021-04-06T21:00:00.000000000' '2021-04-07T00:00:00.000000000'

'2021-04-07T03:00:00.000000000' '2021-04-07T06:00:00.000000000'

'2021-04-07T09:00:00.000000000' '2021-04-07T12:00:00.000000000'

'2021-04-07T15:00:00.000000000' '2021-04-07T18:00:00.000000000'

'2021-04-07T21:00:00.000000000' '2021-04-08T00:00:00.000000000'

'2021-04-08T03:00:00.000000000' '2021-04-08T06:00:00.000000000'

'2021-04-08T09:00:00.000000000' '2021-04-08T12:00:00.000000000']
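The time axis printed above is hourly at first and then switches to 3-hourly steps, which matches the `time_list` entry in the metadata (`range(1,121)`, then `range(123,385,3)`). As a rough sketch, assuming each time is simply the batch time plus the forecast hour, the same axis can be rebuilt with the standard library. `forecast_valid_times` is a hypothetical helper name.

```python
from datetime import datetime, timedelta


def forecast_valid_times(date: str) -> list:
    """Valid times for a 'YYYYMMDDbb' batch, assuming valid time = batch time + forecast hour."""
    init = datetime.strptime(date, "%Y%m%d%H")
    # Hourly steps for hours 1..120, then 3-hourly steps for hours 123..384
    hours = list(range(1, 121)) + list(range(123, 385, 3))
    return [init + timedelta(hours=h) for h in hours]


times = forecast_valid_times("2021032312")
print(len(times))               # 208
print(times[0].isoformat())     # 2021-03-23T13:00:00
print(times[119].isoformat())   # 2021-03-28T12:00:00 (last hourly step)
print(times[120].isoformat())   # 2021-03-28T15:00:00 (first 3-hourly step)
print(times[-1].isoformat())    # 2021-04-08T12:00:00
```

The first, last, and switch-over values line up with the printed sample, where the step changes from one hour to three hours after 2021-03-28T12:00.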

[5]:

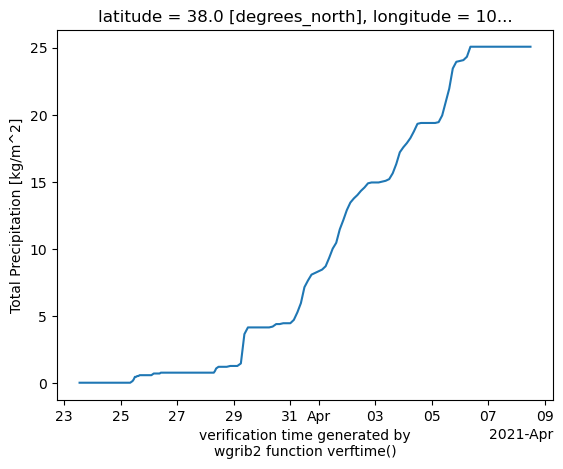

lat, lon = 38, 100

var = 'APCP_surface'

print(f'times series plot for {var}')

ds[var].sel(latitude=lat, longitude=lon).sortby('time').plot()

times series plot for APCP_surface

[5]:

[<matplotlib.lines.Line2D at 0x70811651adb0>]
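The point selection above uses exact coordinate values, which works here because latitude 38 and longitude 100 both fall on the 0.25-degree grid described in the metadata (latitude 0 to 60, longitude 70 to 140). The sketch below, assuming a regular grid with exactly those bounds and increments, shows the arithmetic that maps a coordinate to its grid index. `grid_index` is a hypothetical helper, not an xarray function.

```python
def grid_index(value: float, start: float, stop: float, step: float) -> int:
    """Index of the grid point nearest to `value` on a regular grid (hypothetical helper)."""
    if not start <= value <= stop:
        raise ValueError(f"{value} is outside the grid range [{start}, {stop}]")
    return round((value - start) / step)


# Grid sizes implied by the metadata: latitude 0..60 and longitude 70..140 at 0.25 degrees
n_lat = round((60 - 0) / 0.25) + 1
n_lon = round((140 - 70) / 0.25) + 1

i_lat = grid_index(38, 0, 60, 0.25)
i_lon = grid_index(100, 70, 140, 0.25)

print(n_lat, n_lon)  # 241 281
print(i_lat, i_lon)  # 152 120
```

For a point that does not sit exactly on the grid, xarray's `sel` accepts `method="nearest"`, which picks the closest grid point instead of raising a `KeyError`.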

[6]:

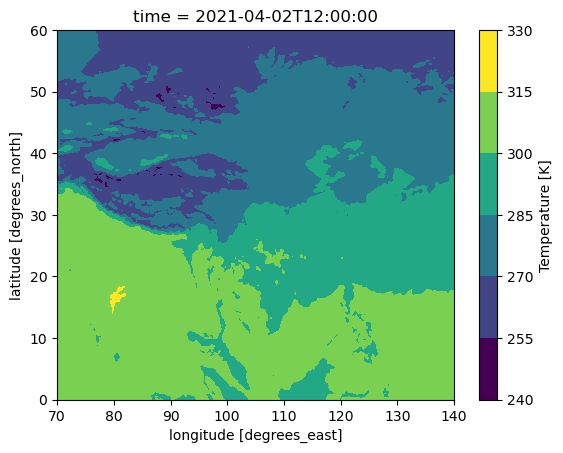

var = 'TMP_2maboveground'

time = '2021-04-02T12:00:00.000000000'

print(f'2D map for {var} at {time}')

ds[var].sortby('time').sel(time=time).plot.contourf()

2D map for TMP_2maboveground at 2021-04-02T12:00:00.000000000

[6]:

<matplotlib.contour.QuadContourSet at 0x70811c505d90>

[7]:

def print_dict(d, indent=0):
    # Recursively print a nested dict, with keys in bold green (ANSI escape codes).
    for key, value in d.items():
        if isinstance(value, dict):
            print(' ' * indent + f"\033[1;32m{key}:\033[0m")
            print_dict(value, indent + 4)
        else:
            print(' ' * indent + f"\033[1;32m{key}:\033[0m {value if value is not None else 'null'}")

[8]:

print('information for group anl')

cat['anl'](date='2021032312').to_dask()

information for group anl

[8]:

<xarray.Dataset> Size: 35MB

Dimensions: (time: 1, latitude: 241, longitude: 281)

Coordinates:

* latitude (latitude) float64 2kB 0.0 0.25 0.5 ... 59.75 60.0

* longitude (longitude) float64 2kB 70.0 70.25 ... 139.8 140.0

* time (time) datetime64[ns] 8B 2021-03-23T12:00:00

Data variables: (12/128)

HGT_1000mb (time, latitude, longitude) float32 271kB dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

HGT_100mb (time, latitude, longitude) float32 271kB dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

HGT_150mb (time, latitude, longitude) float32 271kB dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

HGT_200mb (time, latitude, longitude) float32 271kB dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

HGT_250mb (time, latitude, longitude) float32 271kB dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

HGT_300mb (time, latitude, longitude) float32 271kB dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

... ...

VGRD_750mb (time, latitude, longitude) float32 271kB dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

VGRD_800mb (time, latitude, longitude) float32 271kB dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

VGRD_80maboveground (time, latitude, longitude) float32 271kB dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

VGRD_850mb (time, latitude, longitude) float32 271kB dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

VGRD_900mb (time, latitude, longitude) float32 271kB dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

VGRD_950mb (time, latitude, longitude) float32 271kB dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

Attributes:

Conventions: COARDS

GRIB2_grid_template: 0

History: created by wgrib2

xarray.Dataset

Dimensions: time: 1, latitude: 241, longitude: 281

Coordinates:

- latitude (latitude) float64 0.0 0.25 0.5 ... 59.5 59.75 60.0
    long_name: latitude
    units: degrees_north
    array([ 0. , 0.25, 0.5 , ..., 59.5 , 59.75, 60. ])

- longitude (longitude) float64 70.0 70.25 70.5 ... 139.8 140.0
    long_name: longitude
    units: degrees_east
    array([ 70. , 70.25, 70.5 , ..., 139.5 , 139.75, 140. ])

- time (time) datetime64[ns] 2021-03-23T12:00:00
    long_name: verification time generated by wgrib2 function verftime()
    reference_date: 2021.03.23 12:00:00 UTC
    reference_time: 1616500800.0
    reference_time_description: analyses, reference date is fixed
    reference_time_type: 1
    time_step: 0.0
    time_step_setting: auto
    array(['2021-03-23T12:00:00.000000000'], dtype='datetime64[ns]')

Data variables:

Each variable below has dimensions (time, latitude, longitude) and dtype float32, backed by dask.array<chunksize=(1, 121, 281), meta=np.ndarray>: array shape (1, 241, 281), 264.54 kiB; chunk shape (1, 121, 281), 132.82 kiB; 2 chunks in 2 graph layers. The short_name attribute equals the variable name.

| Variable | level | long_name | units |
| --- | --- | --- | --- |
| HGT_1000mb | 1000 mb | Geopotential Height | m |
| HGT_100mb | 100 mb | Geopotential Height | m |
| HGT_150mb | 150 mb | Geopotential Height | m |
| HGT_200mb | 200 mb | Geopotential Height | m |
| HGT_250mb | 250 mb | Geopotential Height | m |
| HGT_300mb | 300 mb | Geopotential Height | m |
| HGT_350mb | 350 mb | Geopotential Height | m |
| HGT_400mb | 400 mb | Geopotential Height | m |
| HGT_450mb | 450 mb | Geopotential Height | m |
| HGT_500mb | 500 mb | Geopotential Height | m |
| HGT_550mb | 550 mb | Geopotential Height | m |
| HGT_600mb | 600 mb | Geopotential Height | m |
| HGT_650mb | 650 mb | Geopotential Height | m |
| HGT_700mb | 700 mb | Geopotential Height | m |
| HGT_750mb | 750 mb | Geopotential Height | m |
| HGT_800mb | 800 mb | Geopotential Height | m |
| HGT_850mb | 850 mb | Geopotential Height | m |
| HGT_900mb | 900 mb | Geopotential Height | m |
| HGT_950mb | 950 mb | Geopotential Height | m |
| PRES_surface | surface | Pressure | Pa |
| PRMSL_meansealevel | mean sea level | Pressure Reduced to MSL | Pa |
| RH_1000mb | 1000 mb | Relative Humidity | percent |
| RH_100mb | 100 mb | Relative Humidity | percent |
| RH_150mb | 150 mb | Relative Humidity | percent |
| RH_200mb | 200 mb | Relative Humidity | percent |
| RH_250mb | 250 mb | Relative Humidity | percent |
| RH_300mb | 300 mb | Relative Humidity | percent |
| RH_350mb | 350 mb | Relative Humidity | percent |
| RH_400mb | 400 mb | Relative Humidity | percent |
| RH_450mb | 450 mb | Relative Humidity | percent |
| RH_500mb | 500 mb | Relative Humidity | percent |
| RH_550mb | 550 mb | Relative Humidity | percent |
| RH_600mb | 600 mb | Relative Humidity | percent |
| RH_650mb | 650 mb | Relative Humidity | percent |
| RH_700mb | 700 mb | Relative Humidity | percent |
| RH_750mb | 750 mb | Relative Humidity | percent |
| RH_800mb | 800 mb | Relative Humidity | percent |
| RH_850mb | 850 mb | Relative Humidity | percent |
| RH_900mb | 900 mb | Relative Humidity | percent |
| RH_950mb | 950 mb | Relative Humidity | percent |
| SPFH_1000mb | 1000 mb | Specific Humidity | kg/kg |
| SPFH_100mb | 100 mb | Specific Humidity | kg/kg |
| SPFH_150mb | 150 mb | Specific Humidity | kg/kg |
| SPFH_200mb | 200 mb | Specific Humidity | kg/kg |
| SPFH_250mb | 250 mb | Specific Humidity | kg/kg |
| SPFH_300mb | 300 mb | Specific Humidity | kg/kg |
| SPFH_350mb | 350 mb | Specific Humidity | kg/kg |
| SPFH_400mb | 400 mb | Specific Humidity | kg/kg |
| SPFH_450mb | 450 mb | Specific Humidity | kg/kg |
| SPFH_500mb | 500 mb | Specific Humidity | kg/kg |
| SPFH_550mb | 550 mb | Specific Humidity | kg/kg |
| SPFH_600mb | 600 mb | Specific Humidity | kg/kg |
| SPFH_650mb | 650 mb | Specific Humidity | kg/kg |
| SPFH_700mb | 700 mb | Specific Humidity | kg/kg |
| SPFH_750mb | 750 mb | Specific Humidity | kg/kg |
| SPFH_800mb | 800 mb | Specific Humidity | kg/kg |

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - SPFH_850mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 850 mb

- long_name :

- Specific Humidity

- short_name :

- SPFH_850mb

- units :

- kg/kg

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - SPFH_900mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 900 mb

- long_name :

- Specific Humidity

- short_name :

- SPFH_900mb

- units :

- kg/kg

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - SPFH_950mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 950 mb

- long_name :

- Specific Humidity

- short_name :

- SPFH_950mb

- units :

- kg/kg

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - TMP_1000mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 1000 mb

- long_name :

- Temperature

- short_name :

- TMP_1000mb

- units :

- K

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - TMP_100mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 100 mb

- long_name :

- Temperature

- short_name :

- TMP_100mb

- units :

- K

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - TMP_150mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 150 mb

- long_name :

- Temperature

- short_name :

- TMP_150mb

- units :

- K

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - TMP_200mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 200 mb

- long_name :

- Temperature

- short_name :

- TMP_200mb

- units :

- K

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - TMP_250mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 250 mb

- long_name :

- Temperature

- short_name :

- TMP_250mb

- units :

- K

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - TMP_300mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 300 mb

- long_name :

- Temperature

- short_name :

- TMP_300mb

- units :

- K

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - TMP_350mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 350 mb

- long_name :

- Temperature

- short_name :

- TMP_350mb

- units :

- K

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - TMP_400mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 400 mb

- long_name :

- Temperature

- short_name :

- TMP_400mb

- units :

- K

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - TMP_450mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 450 mb

- long_name :

- Temperature

- short_name :

- TMP_450mb

- units :

- K

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - TMP_500mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 500 mb

- long_name :

- Temperature

- short_name :

- TMP_500mb

- units :

- K

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - TMP_550mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 550 mb

- long_name :

- Temperature

- short_name :

- TMP_550mb

- units :

- K

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - TMP_600mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 600 mb

- long_name :

- Temperature

- short_name :

- TMP_600mb

- units :

- K

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - TMP_650mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 650 mb

- long_name :

- Temperature

- short_name :

- TMP_650mb

- units :

- K

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - TMP_700mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 700 mb

- long_name :

- Temperature

- short_name :

- TMP_700mb

- units :

- K

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - TMP_750mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 750 mb

- long_name :

- Temperature

- short_name :

- TMP_750mb

- units :

- K

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - TMP_800mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 800 mb

- long_name :

- Temperature

- short_name :

- TMP_800mb

- units :

- K

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - TMP_850mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 850 mb

- long_name :

- Temperature

- short_name :

- TMP_850mb

- units :

- K

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - TMP_900mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 900 mb

- long_name :

- Temperature

- short_name :

- TMP_900mb

- units :

- K

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - TMP_950mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 950 mb

- long_name :

- Temperature

- short_name :

- TMP_950mb

- units :

- K

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - UGRD_1000mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 1000 mb

- long_name :

- U-Component of Wind

- short_name :

- UGRD_1000mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - UGRD_100maboveground(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 100 m above ground

- long_name :

- U-Component of Wind

- short_name :

- UGRD_100maboveground

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - UGRD_100mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 100 mb

- long_name :

- U-Component of Wind

- short_name :

- UGRD_100mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - UGRD_150mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 150 mb

- long_name :

- U-Component of Wind

- short_name :

- UGRD_150mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - UGRD_200mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 200 mb

- long_name :

- U-Component of Wind

- short_name :

- UGRD_200mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - UGRD_20maboveground(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 20 m above ground

- long_name :

- U-Component of Wind

- short_name :

- UGRD_20maboveground

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - UGRD_250mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 250 mb

- long_name :

- U-Component of Wind

- short_name :

- UGRD_250mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - UGRD_300mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 300 mb

- long_name :

- U-Component of Wind

- short_name :

- UGRD_300mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - UGRD_30maboveground(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 30 m above ground

- long_name :

- U-Component of Wind

- short_name :

- UGRD_30maboveground

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - UGRD_350mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 350 mb

- long_name :

- U-Component of Wind

- short_name :

- UGRD_350mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - UGRD_400mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 400 mb

- long_name :

- U-Component of Wind

- short_name :

- UGRD_400mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - UGRD_40maboveground(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 40 m above ground

- long_name :

- U-Component of Wind

- short_name :

- UGRD_40maboveground

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - UGRD_450mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 450 mb

- long_name :

- U-Component of Wind

- short_name :

- UGRD_450mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - UGRD_500mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 500 mb

- long_name :

- U-Component of Wind

- short_name :

- UGRD_500mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - UGRD_50maboveground(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 50 m above ground

- long_name :

- U-Component of Wind

- short_name :

- UGRD_50maboveground

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - UGRD_550mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 550 mb

- long_name :

- U-Component of Wind

- short_name :

- UGRD_550mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - UGRD_600mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 600 mb

- long_name :

- U-Component of Wind

- short_name :

- UGRD_600mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - UGRD_650mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 650 mb

- long_name :

- U-Component of Wind

- short_name :

- UGRD_650mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - UGRD_700mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 700 mb

- long_name :

- U-Component of Wind

- short_name :

- UGRD_700mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - UGRD_750mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 750 mb

- long_name :

- U-Component of Wind

- short_name :

- UGRD_750mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - UGRD_800mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 800 mb

- long_name :

- U-Component of Wind

- short_name :

- UGRD_800mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - UGRD_80maboveground(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 80 m above ground

- long_name :

- U-Component of Wind

- short_name :

- UGRD_80maboveground

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - UGRD_850mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 850 mb

- long_name :

- U-Component of Wind

- short_name :

- UGRD_850mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - UGRD_900mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 900 mb

- long_name :

- U-Component of Wind

- short_name :

- UGRD_900mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - UGRD_950mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 950 mb

- long_name :

- U-Component of Wind

- short_name :

- UGRD_950mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - VGRD_1000mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 1000 mb

- long_name :

- V-Component of Wind

- short_name :

- VGRD_1000mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - VGRD_100maboveground(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 100 m above ground

- long_name :

- V-Component of Wind

- short_name :

- VGRD_100maboveground

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - VGRD_100mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 100 mb

- long_name :

- V-Component of Wind

- short_name :

- VGRD_100mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - VGRD_150mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 150 mb

- long_name :

- V-Component of Wind

- short_name :

- VGRD_150mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - VGRD_200mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 200 mb

- long_name :

- V-Component of Wind

- short_name :

- VGRD_200mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - VGRD_20maboveground(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 20 m above ground

- long_name :

- V-Component of Wind

- short_name :

- VGRD_20maboveground

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - VGRD_250mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 250 mb

- long_name :

- V-Component of Wind

- short_name :

- VGRD_250mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - VGRD_300mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 300 mb

- long_name :

- V-Component of Wind

- short_name :

- VGRD_300mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - VGRD_30maboveground(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 30 m above ground

- long_name :

- V-Component of Wind

- short_name :

- VGRD_30maboveground

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - VGRD_350mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 350 mb

- long_name :

- V-Component of Wind

- short_name :

- VGRD_350mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - VGRD_400mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 400 mb

- long_name :

- V-Component of Wind

- short_name :

- VGRD_400mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - VGRD_40maboveground(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 40 m above ground

- long_name :

- V-Component of Wind

- short_name :

- VGRD_40maboveground

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - VGRD_450mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 450 mb

- long_name :

- V-Component of Wind

- short_name :

- VGRD_450mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - VGRD_500mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 500 mb

- long_name :

- V-Component of Wind

- short_name :

- VGRD_500mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - VGRD_50maboveground(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 50 m above ground

- long_name :

- V-Component of Wind

- short_name :

- VGRD_50maboveground

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - VGRD_550mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 550 mb

- long_name :

- V-Component of Wind

- short_name :

- VGRD_550mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - VGRD_600mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 600 mb

- long_name :

- V-Component of Wind

- short_name :

- VGRD_600mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - VGRD_650mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 650 mb

- long_name :

- V-Component of Wind

- short_name :

- VGRD_650mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - VGRD_700mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 700 mb

- long_name :

- V-Component of Wind

- short_name :

- VGRD_700mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - VGRD_750mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 750 mb

- long_name :

- V-Component of Wind

- short_name :

- VGRD_750mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - VGRD_800mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 800 mb

- long_name :

- V-Component of Wind

- short_name :

- VGRD_800mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - VGRD_80maboveground(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 80 m above ground

- long_name :

- V-Component of Wind

- short_name :

- VGRD_80maboveground

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - VGRD_850mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 850 mb

- long_name :

- V-Component of Wind

- short_name :

- VGRD_850mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - VGRD_900mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 900 mb

- long_name :

- V-Component of Wind

- short_name :

- VGRD_900mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - VGRD_950mb(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 950 mb

- long_name :

- V-Component of Wind

- short_name :

- VGRD_950mb

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray

- latitude PandasIndex

PandasIndex(Index([ 0.0, 0.25, 0.5, 0.75, 1.0, 1.25, 1.5, 1.75, 2.0, 2.25, ... 57.75, 58.0, 58.25, 58.5, 58.75, 59.0, 59.25, 59.5, 59.75, 60.0], dtype='float64', name='latitude', length=241))

- longitude PandasIndex

PandasIndex(Index([ 70.0, 70.25, 70.5, 70.75, 71.0, 71.25, 71.5, 71.75, 72.0, 72.25, ... 137.75, 138.0, 138.25, 138.5, 138.75, 139.0, 139.25, 139.5, 139.75, 140.0], dtype='float64', name='longitude', length=281))

- time PandasIndex

PandasIndex(DatetimeIndex(['2021-03-23 12:00:00'], dtype='datetime64[ns]', name='time', freq=None))

- Conventions :

- COARDS

- GRIB2_grid_template :

- 0

- History :

- created by wgrib2
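The grid lengths and byte counts repeated throughout the listing above can be checked by hand. The sketch below uses only numbers from the catalog metadata (latitude 0 to 60, longitude 70 to 140, 0.25 degree increments) and the 4-byte size of float32. The helper names `n_points` and `kib` are illustrative and do not belong to any library.

```python
import math


def n_points(start, stop, step):
    """Number of points on a regular axis with both end points included."""
    return round((stop - start) / step) + 1


def kib(n_bytes):
    """Convert bytes to KiB (1 KiB = 1024 bytes), rounded to two decimals."""
    return round(n_bytes / 1024, 2)


FLOAT32_BYTES = 4

n_lat = n_points(0, 60, 0.25)    # latitude axis
n_lon = n_points(70, 140, 0.25)  # longitude axis
print(n_lat, n_lon)              # 241 281

# One variable has shape (time=1, latitude, longitude).
array_bytes = 1 * n_lat * n_lon * FLOAT32_BYTES
# Each chunk has shape (1, 121, 281), as reported in the listing.
chunk_lat = 121
chunk_bytes = 1 * chunk_lat * n_lon * FLOAT32_BYTES
print(kib(array_bytes), kib(chunk_bytes))  # 264.54 132.82

# Chunks needed to cover the latitude axis.
n_chunks = math.ceil(n_lat / chunk_lat)
print(n_chunks)                  # 2
```

The results agree with the `length=241` and `length=281` indexes, the `264.54 kiB` and `132.82 kiB` sizes, and the `2 chunks` reported for every variable.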

[9]:

# Open the 'f000' group of the 2021-03-23 12Z batch as a lazy, dask-backed xarray Dataset

print('information for group f000')

cat['f000'](date='2021032312').to_dask()

information for group f000

[9]:

<xarray.Dataset> Size: 37MB

Dimensions: (time: 1, latitude: 241, longitude: 281)

Coordinates:

* latitude (latitude) float64 2kB 0.0 0.25 0.5 ... 59.75 60.0

* longitude (longitude) float64 2kB 70.0 70.25 ... 139.8 140.0

* time (time) datetime64[ns] 8B 2021-03-23T12:00:00

Data variables: (12/138)

APTMP_2maboveground (time, latitude, longitude) float32 271kB dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

CRAIN_surface (time, latitude, longitude) float32 271kB dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

DPT_2maboveground (time, latitude, longitude) float32 271kB dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

FRICV_surface (time, latitude, longitude) float32 271kB dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

GUST_surface (time, latitude, longitude) float32 271kB dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

HGT_1000mb (time, latitude, longitude) float32 271kB dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

... ...

VGRD_750mb (time, latitude, longitude) float32 271kB dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

VGRD_800mb (time, latitude, longitude) float32 271kB dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

VGRD_80maboveground (time, latitude, longitude) float32 271kB dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

VGRD_850mb (time, latitude, longitude) float32 271kB dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

VGRD_900mb (time, latitude, longitude) float32 271kB dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

VGRD_950mb (time, latitude, longitude) float32 271kB dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

Attributes:

Conventions: COARDS

GRIB2_grid_template: 0

History: created by wgrib2

xarray.Dataset

- time: 1

- latitude: 241

- longitude: 281

- latitude(latitude)float640.0 0.25 0.5 ... 59.5 59.75 60.0

- long_name :

- latitude

- units :

- degrees_north

array([ 0. , 0.25, 0.5 , ..., 59.5 , 59.75, 60. ])

- longitude(longitude)float6470.0 70.25 70.5 ... 139.8 140.0

- long_name :

- longitude

- units :

- degrees_east

array([ 70. , 70.25, 70.5 , ..., 139.5 , 139.75, 140. ])

- time(time)datetime64[ns]2021-03-23T12:00:00

- long_name :

- verification time generated by wgrib2 function verftime()

- reference_date :

- 2021.03.23 12:00:00 UTC

- reference_time :

- 1616500800.0

- reference_time_description :

- analyses, reference date is fixed

- reference_time_type :

- 1

- time_step :

- 0.0

- time_step_setting :

- auto

array(['2021-03-23T12:00:00.000000000'], dtype='datetime64[ns]')
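The `reference_time` attribute above can be cross-checked against `reference_date` and the batch string passed to the catalog. This is a minimal sketch that assumes `reference_time` is a count of seconds since the Unix epoch in UTC; the attribute listing does not state this, but the value is consistent with the reference date shown.

```python
from datetime import datetime, timezone

# Value copied from the reference_time attribute of the time coordinate.
reference_time = 1616500800.0

# Assumption: seconds since 1970-01-01 00:00:00 UTC.
ref = datetime.fromtimestamp(reference_time, tz=timezone.utc)
print(ref.strftime("%Y.%m.%d %H:%M:%S UTC"))  # 2021.03.23 12:00:00 UTC

# The same instant in the YYYYMMDDHH form used for the `date` argument.
batch_date = ref.strftime("%Y%m%d%H")
print(batch_date)  # 2021032312
```

Under that assumption the attribute matches both `reference_date` and the single entry of the `time` coordinate, which is what a zero `time_step` for this group would suggest.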

- APTMP_2maboveground(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 2 m above ground

- long_name :

- Apparent Temperature

- short_name :

- APTMP_2maboveground

- units :

- K

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - CRAIN_surface(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- surface

- long_name :

- Categorical Rain

- short_name :

- CRAIN_surface

- units :

- -

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - DPT_2maboveground(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 2 m above ground

- long_name :

- Dew Point Temperature

- short_name :

- DPT_2maboveground

- units :

- K

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - FRICV_surface(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- surface

- long_name :

- Frictional Velocity

- short_name :

- FRICV_surface

- units :

- m/s

Array Chunk Bytes 264.54 kiB 132.82 kiB Shape (1, 241, 281) (1, 121, 281) Dask graph 2 chunks in 2 graph layers Data type float32 numpy.ndarray - GUST_surface(time, latitude, longitude)float32dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- surface

- long_name :

- Wind Speed (Gust)

- short_name :

- GUST_surface

- units :

- m/s

Array: 264.54 kiB, shape (1, 241, 281); Chunk: 132.82 kiB, shape (1, 121, 281); Dask graph: 2 chunks in 2 graph layers; Data type: float32 numpy.ndarray

- HGT_1000mb (time, latitude, longitude) float32 dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 1000 mb

- long_name :

- Geopotential Height

- short_name :

- HGT_1000mb

- units :

- m

Array: 264.54 kiB, shape (1, 241, 281); Chunk: 132.82 kiB, shape (1, 121, 281); Dask graph: 2 chunks in 2 graph layers; Data type: float32 numpy.ndarray

- HGT_100mb (time, latitude, longitude) float32 dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 100 mb

- long_name :

- Geopotential Height

- short_name :

- HGT_100mb

- units :

- m

Array: 264.54 kiB, shape (1, 241, 281); Chunk: 132.82 kiB, shape (1, 121, 281); Dask graph: 2 chunks in 2 graph layers; Data type: float32 numpy.ndarray

- HGT_150mb (time, latitude, longitude) float32 dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 150 mb

- long_name :

- Geopotential Height

- short_name :

- HGT_150mb

- units :

- m

Array: 264.54 kiB, shape (1, 241, 281); Chunk: 132.82 kiB, shape (1, 121, 281); Dask graph: 2 chunks in 2 graph layers; Data type: float32 numpy.ndarray

- HGT_200mb (time, latitude, longitude) float32 dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 200 mb

- long_name :

- Geopotential Height

- short_name :

- HGT_200mb

- units :

- m

Array: 264.54 kiB, shape (1, 241, 281); Chunk: 132.82 kiB, shape (1, 121, 281); Dask graph: 2 chunks in 2 graph layers; Data type: float32 numpy.ndarray

- HGT_250mb (time, latitude, longitude) float32 dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 250 mb

- long_name :

- Geopotential Height

- short_name :

- HGT_250mb

- units :

- m

Array: 264.54 kiB, shape (1, 241, 281); Chunk: 132.82 kiB, shape (1, 121, 281); Dask graph: 2 chunks in 2 graph layers; Data type: float32 numpy.ndarray

- HGT_300mb (time, latitude, longitude) float32 dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 300 mb

- long_name :

- Geopotential Height

- short_name :

- HGT_300mb

- units :

- m

Array: 264.54 kiB, shape (1, 241, 281); Chunk: 132.82 kiB, shape (1, 121, 281); Dask graph: 2 chunks in 2 graph layers; Data type: float32 numpy.ndarray

- HGT_350mb (time, latitude, longitude) float32 dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 350 mb

- long_name :

- Geopotential Height

- short_name :

- HGT_350mb

- units :

- m

Array: 264.54 kiB, shape (1, 241, 281); Chunk: 132.82 kiB, shape (1, 121, 281); Dask graph: 2 chunks in 2 graph layers; Data type: float32 numpy.ndarray

- HGT_400mb (time, latitude, longitude) float32 dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 400 mb

- long_name :

- Geopotential Height

- short_name :

- HGT_400mb

- units :

- m

Array: 264.54 kiB, shape (1, 241, 281); Chunk: 132.82 kiB, shape (1, 121, 281); Dask graph: 2 chunks in 2 graph layers; Data type: float32 numpy.ndarray

- HGT_450mb (time, latitude, longitude) float32 dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 450 mb

- long_name :

- Geopotential Height

- short_name :

- HGT_450mb

- units :

- m

Array: 264.54 kiB, shape (1, 241, 281); Chunk: 132.82 kiB, shape (1, 121, 281); Dask graph: 2 chunks in 2 graph layers; Data type: float32 numpy.ndarray

- HGT_500mb (time, latitude, longitude) float32 dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 500 mb

- long_name :

- Geopotential Height

- short_name :

- HGT_500mb

- units :

- m

Array: 264.54 kiB, shape (1, 241, 281); Chunk: 132.82 kiB, shape (1, 121, 281); Dask graph: 2 chunks in 2 graph layers; Data type: float32 numpy.ndarray

- HGT_550mb (time, latitude, longitude) float32 dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 550 mb

- long_name :

- Geopotential Height

- short_name :

- HGT_550mb

- units :

- m

Array: 264.54 kiB, shape (1, 241, 281); Chunk: 132.82 kiB, shape (1, 121, 281); Dask graph: 2 chunks in 2 graph layers; Data type: float32 numpy.ndarray

- HGT_600mb (time, latitude, longitude) float32 dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 600 mb

- long_name :

- Geopotential Height

- short_name :

- HGT_600mb

- units :

- m

Array: 264.54 kiB, shape (1, 241, 281); Chunk: 132.82 kiB, shape (1, 121, 281); Dask graph: 2 chunks in 2 graph layers; Data type: float32 numpy.ndarray

- HGT_650mb (time, latitude, longitude) float32 dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 650 mb

- long_name :

- Geopotential Height

- short_name :

- HGT_650mb

- units :

- m

Array: 264.54 kiB, shape (1, 241, 281); Chunk: 132.82 kiB, shape (1, 121, 281); Dask graph: 2 chunks in 2 graph layers; Data type: float32 numpy.ndarray

- HGT_700mb (time, latitude, longitude) float32 dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 700 mb

- long_name :

- Geopotential Height

- short_name :

- HGT_700mb

- units :

- m

Array: 264.54 kiB, shape (1, 241, 281); Chunk: 132.82 kiB, shape (1, 121, 281); Dask graph: 2 chunks in 2 graph layers; Data type: float32 numpy.ndarray

- HGT_750mb (time, latitude, longitude) float32 dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 750 mb

- long_name :

- Geopotential Height

- short_name :

- HGT_750mb

- units :

- m

Array: 264.54 kiB, shape (1, 241, 281); Chunk: 132.82 kiB, shape (1, 121, 281); Dask graph: 2 chunks in 2 graph layers; Data type: float32 numpy.ndarray

- HGT_800mb (time, latitude, longitude) float32 dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 800 mb

- long_name :

- Geopotential Height

- short_name :

- HGT_800mb

- units :

- m

Array: 264.54 kiB, shape (1, 241, 281); Chunk: 132.82 kiB, shape (1, 121, 281); Dask graph: 2 chunks in 2 graph layers; Data type: float32 numpy.ndarray

- HGT_850mb (time, latitude, longitude) float32 dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 850 mb

- long_name :

- Geopotential Height

- short_name :

- HGT_850mb

- units :

- m

Array: 264.54 kiB, shape (1, 241, 281); Chunk: 132.82 kiB, shape (1, 121, 281); Dask graph: 2 chunks in 2 graph layers; Data type: float32 numpy.ndarray

- HGT_900mb (time, latitude, longitude) float32 dask.array<chunksize=(1, 121, 281), meta=np.ndarray>

- level :

- 900 mb

- long_name :

- Geopotential Height

- short_name :

- HGT_900mb

- units :

- m